outlets <- readRDS("../data/outlets.rds") # load dataset

head(outlets) |> kable() # first six observations of the dataset

| population | profit |
|---|---|
| 6.1101 | 17.5920 |
| 5.5277 | 9.1302 |
| 8.5186 | 13.6620 |
| 7.0032 | 11.8540 |
| 5.8598 | 6.8233 |
| 8.3829 | 11.8860 |

What do we call these:

What is our goal in supervised learning?

From ISLR2


Suppose the CEO of a restaurant franchise is considering opening new outlets in different cities. They would like to expand into cities that will yield higher profits, on the assumption that more populous cities are likely to be more profitable.

They have data on the population (in 100,000s) and profit (in $1,000s) for the 97 cities where they currently have outlets.

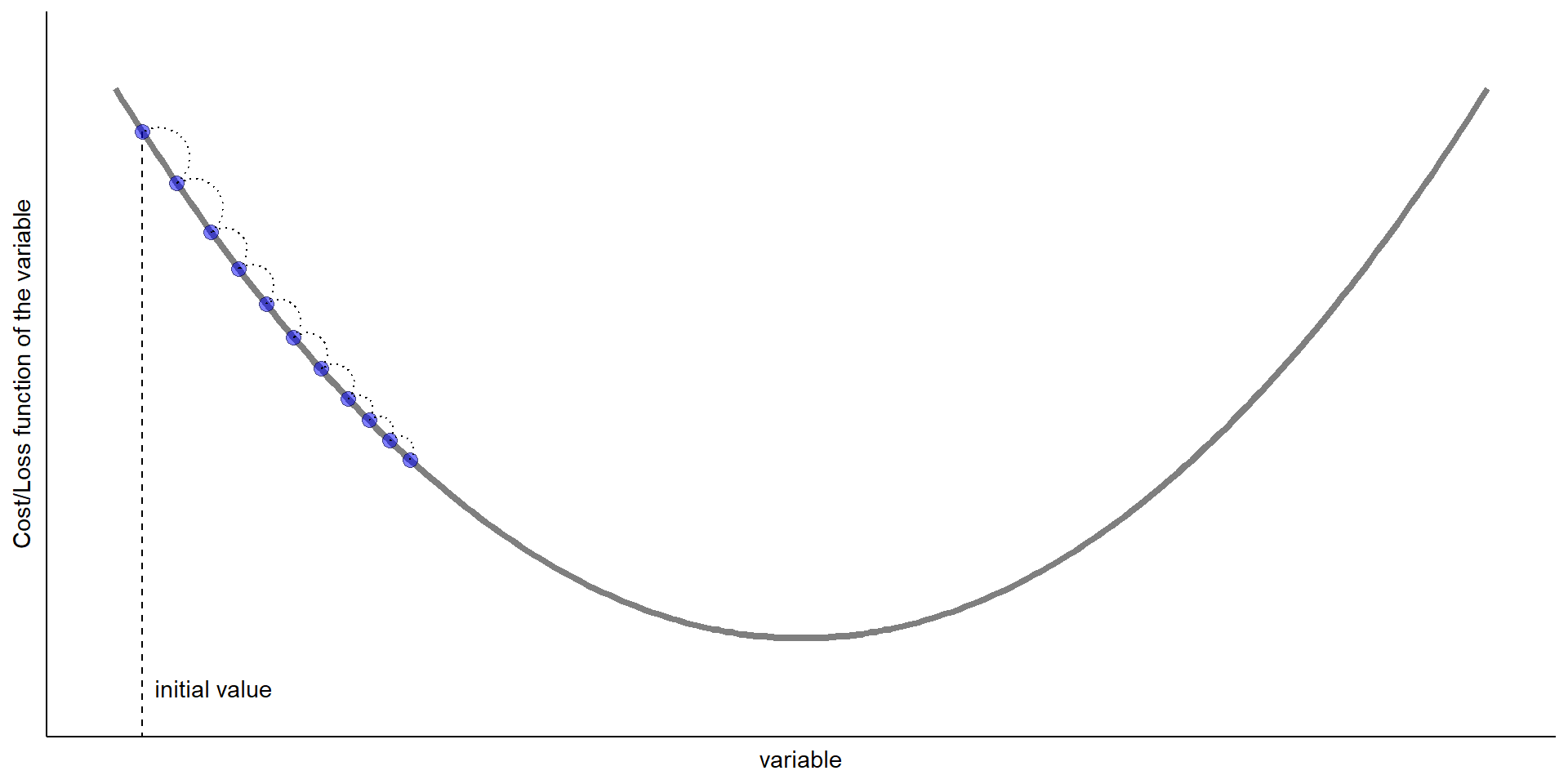

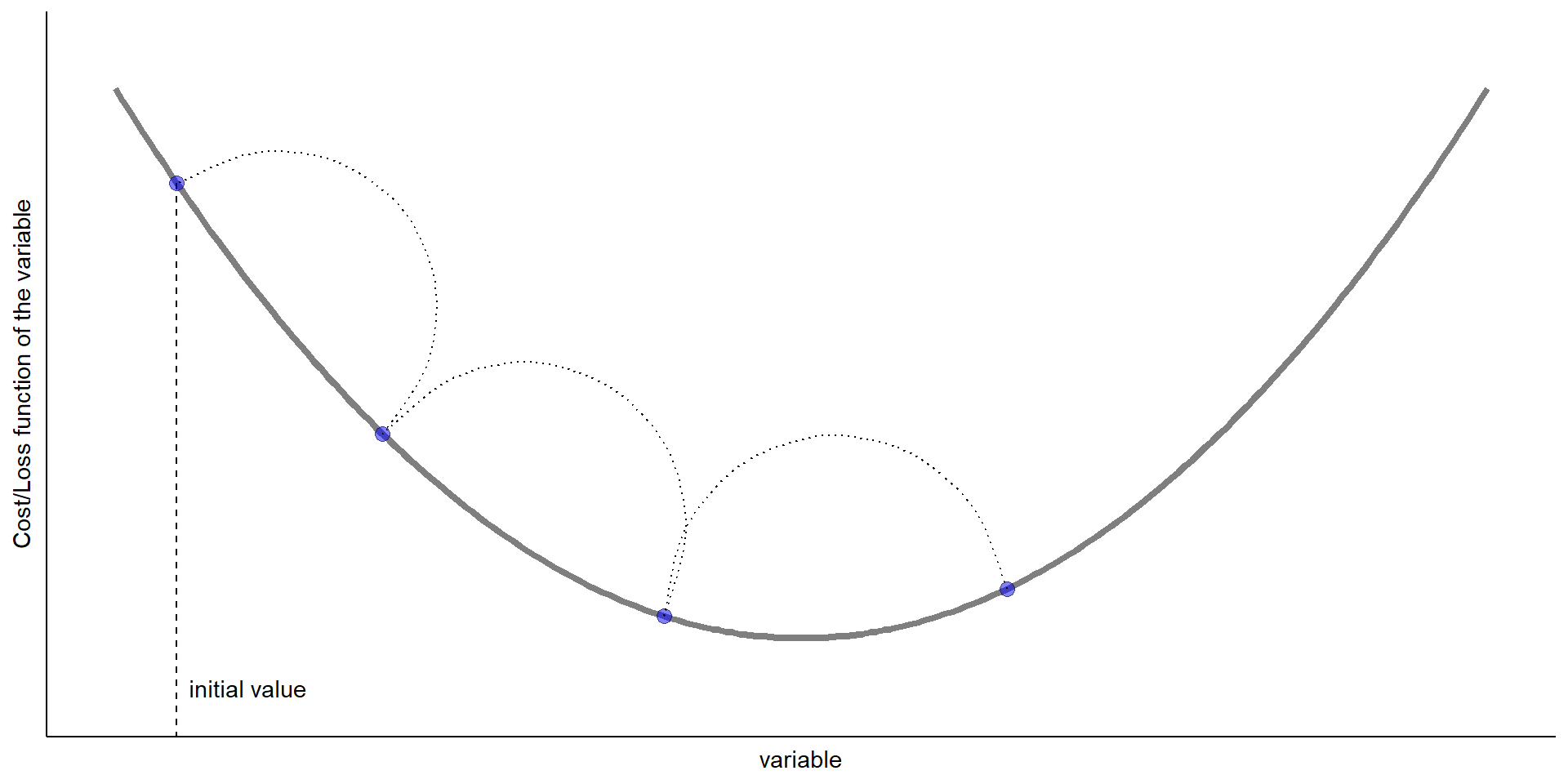

population and profit better

Updates to the parameters:

\[ \begin{aligned} \text{new value of parameters} &= \text{old value of parameters}\\ &\qquad- \text{step size} \times \text{gradient of function w.r.t. parameters} \end{aligned} \]
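
The update rule can be illustrated with a minimal sketch in R. This is an illustration under stated assumptions, not the method used in the slides: it assumes a straight-line model `profit = b0 + b1 * population`, a halved mean-squared-error cost, a step size of 0.01, a starting point of zero, and 1,000 iterations. To stay self-contained it uses only the six rows shown by `head(outlets)` rather than all 97 cities. The names `cost`, `gradient`, `step_size`, and `b` are hypothetical.

```r
# First six rows of `outlets`, copied from the head() output (not the full data)
population <- c(6.1101, 5.5277, 8.5186, 7.0032, 5.8598, 8.3829)
profit     <- c(17.5920, 9.1302, 13.6620, 11.8540, 6.8233, 11.8860)

# Assumed cost: half the mean squared error of the line b[1] + b[2] * population
cost <- function(b) {
  mean((b[1] + b[2] * population - profit)^2) / 2
}

# Gradient of that cost with respect to the intercept and the slope
gradient <- function(b) {
  residual <- b[1] + b[2] * population - profit
  c(mean(residual), mean(residual * population))
}

step_size <- 0.01   # assumed value; too large a step would make the updates diverge
b <- c(0, 0)        # assumed starting values for (intercept, slope)
cost_start <- cost(b)

for (i in 1:1000) {
  # new value of parameters = old value - step size * gradient
  b <- b - step_size * gradient(b)
}

cost_end <- cost(b)
```

Comparing `cost_start` with `cost_end` shows whether the updates moved the parameters toward a better-fitting line. On the full dataset, the step size and iteration count would likely need to be tuned again.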