MATH 427: Preprocessing, Missing Data, and Resampling

Eric Friedlander

Computational Set-Up

Repeated Cross-Validation

Repeated K-Fold Cross-Validation (CV)

- Partition your data into \(K\) randomly selected non-overlapping “folds”

- Folds don’t overlap and every training observation is in one fold

- Each fold contains roughly \(1/K\) of the training data

- Looping through the folds \(k = 1, \ldots, K\):

- Treat fold \(k\) as the assessment set

- Treat all folds except for \(k\) as the analysis set

- Fit model to analysis set (use whole modeling workflow)

- Compute error metrics on assessment set

- After the loop, you will have \(K\) copies of each error metric

- Average them together to get performance estimate

- Can also look at distribution of performance metrics

- Repeated CV: repeat this whole process from the beginning, each time with a new random partition into \(K\) folds

- If you want a more precise performance estimate, average the metrics over all repeats
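The loop described above can be sketched by hand in base R. This is only an illustration: it uses the built-in mtcars data and a toy linear model rather than the Ames data, and the values of K, the number of repeats, and the seed are arbitrary assumptions.

```r
# Repeated K-fold CV by hand, using only base R and the built-in mtcars data
set.seed(427)   # arbitrary seed, only for reproducibility

K <- 5          # number of folds (assumed for this toy example)
n_repeats <- 3  # how many times the whole K-fold procedure is repeated
n <- nrow(mtcars)

rmse_values <- c()
for (r in seq_len(n_repeats)) {
  # Randomly assign every row to exactly one of K non-overlapping folds
  fold_id <- sample(rep(seq_len(K), length.out = n))
  for (k in seq_len(K)) {
    analysis   <- mtcars[fold_id != k, ]  # all folds except k
    assessment <- mtcars[fold_id == k, ]  # fold k
    fit  <- lm(mpg ~ wt + hp, data = analysis)
    pred <- predict(fit, newdata = assessment)
    rmse_values <- c(rmse_values, sqrt(mean((assessment$mpg - pred)^2)))
  }
}

length(rmse_values)  # K * n_repeats individual RMSE estimates
mean(rmse_values)    # averaged performance estimate
hist(rmse_values)    # distribution of the individual estimates
```

In practice the fold bookkeeping is handled by a resampling package; the point here is only that every row is assessed exactly once per repeat.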

CV Workflow in R

Data: Ames Housing Prices

A data set from De Cock (2011) in which 82 fields were recorded for 2,930 properties in Ames, IA. This version is copied from the AmesHousing package but does not include a few quality columns that appear to be outcomes rather than predictors.

Goal: Predict Sale_Price.

Rows: 2,930

Columns: 74

$ MS_SubClass <fct> One_Story_1946_and_Newer_All_Styles, One_Story_1946…

$ MS_Zoning <fct> Residential_Low_Density, Residential_High_Density, …

$ Lot_Frontage <dbl> 141, 80, 81, 93, 74, 78, 41, 43, 39, 60, 75, 0, 63,…

$ Lot_Area <int> 31770, 11622, 14267, 11160, 13830, 9978, 4920, 5005…

$ Street <fct> Pave, Pave, Pave, Pave, Pave, Pave, Pave, Pave, Pav…

$ Alley <fct> No_Alley_Access, No_Alley_Access, No_Alley_Access, …

$ Lot_Shape <fct> Slightly_Irregular, Regular, Slightly_Irregular, Re…

$ Land_Contour <fct> Lvl, Lvl, Lvl, Lvl, Lvl, Lvl, Lvl, HLS, Lvl, Lvl, L…

$ Utilities <fct> AllPub, AllPub, AllPub, AllPub, AllPub, AllPub, All…

$ Lot_Config <fct> Corner, Inside, Corner, Corner, Inside, Inside, Ins…

$ Land_Slope <fct> Gtl, Gtl, Gtl, Gtl, Gtl, Gtl, Gtl, Gtl, Gtl, Gtl, G…

$ Neighborhood <fct> North_Ames, North_Ames, North_Ames, North_Ames, Gil…

$ Condition_1 <fct> Norm, Feedr, Norm, Norm, Norm, Norm, Norm, Norm, No…

$ Condition_2 <fct> Norm, Norm, Norm, Norm, Norm, Norm, Norm, Norm, Nor…

$ Bldg_Type <fct> OneFam, OneFam, OneFam, OneFam, OneFam, OneFam, Twn…

$ House_Style <fct> One_Story, One_Story, One_Story, One_Story, Two_Sto…

$ Overall_Cond <fct> Average, Above_Average, Above_Average, Average, Ave…

$ Year_Built <int> 1960, 1961, 1958, 1968, 1997, 1998, 2001, 1992, 199…

$ Year_Remod_Add <int> 1960, 1961, 1958, 1968, 1998, 1998, 2001, 1992, 199…

$ Roof_Style <fct> Hip, Gable, Hip, Hip, Gable, Gable, Gable, Gable, G…

$ Roof_Matl <fct> CompShg, CompShg, CompShg, CompShg, CompShg, CompSh…

$ Exterior_1st <fct> BrkFace, VinylSd, Wd Sdng, BrkFace, VinylSd, VinylS…

$ Exterior_2nd <fct> Plywood, VinylSd, Wd Sdng, BrkFace, VinylSd, VinylS…

$ Mas_Vnr_Type <fct> Stone, None, BrkFace, None, None, BrkFace, None, No…

$ Mas_Vnr_Area <dbl> 112, 0, 108, 0, 0, 20, 0, 0, 0, 0, 0, 0, 0, 0, 0, 6…

$ Exter_Cond <fct> Typical, Typical, Typical, Typical, Typical, Typica…

$ Foundation <fct> CBlock, CBlock, CBlock, CBlock, PConc, PConc, PConc…

$ Bsmt_Cond <fct> Good, Typical, Typical, Typical, Typical, Typical, …

$ Bsmt_Exposure <fct> Gd, No, No, No, No, No, Mn, No, No, No, No, No, No,…

$ BsmtFin_Type_1 <fct> BLQ, Rec, ALQ, ALQ, GLQ, GLQ, GLQ, ALQ, GLQ, Unf, U…

$ BsmtFin_SF_1 <dbl> 2, 6, 1, 1, 3, 3, 3, 1, 3, 7, 7, 1, 7, 3, 3, 1, 3, …

$ BsmtFin_Type_2 <fct> Unf, LwQ, Unf, Unf, Unf, Unf, Unf, Unf, Unf, Unf, U…

$ BsmtFin_SF_2 <dbl> 0, 144, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1120, 0…

$ Bsmt_Unf_SF <dbl> 441, 270, 406, 1045, 137, 324, 722, 1017, 415, 994,…

$ Total_Bsmt_SF <dbl> 1080, 882, 1329, 2110, 928, 926, 1338, 1280, 1595, …

$ Heating <fct> GasA, GasA, GasA, GasA, GasA, GasA, GasA, GasA, Gas…

$ Heating_QC <fct> Fair, Typical, Typical, Excellent, Good, Excellent,…

$ Central_Air <fct> Y, Y, Y, Y, Y, Y, Y, Y, Y, Y, Y, Y, Y, Y, Y, Y, Y, …

$ Electrical <fct> SBrkr, SBrkr, SBrkr, SBrkr, SBrkr, SBrkr, SBrkr, SB…

$ First_Flr_SF <int> 1656, 896, 1329, 2110, 928, 926, 1338, 1280, 1616, …

$ Second_Flr_SF <int> 0, 0, 0, 0, 701, 678, 0, 0, 0, 776, 892, 0, 676, 0,…

$ Gr_Liv_Area <int> 1656, 896, 1329, 2110, 1629, 1604, 1338, 1280, 1616…

$ Bsmt_Full_Bath <dbl> 1, 0, 0, 1, 0, 0, 1, 0, 1, 0, 0, 1, 0, 1, 1, 1, 0, …

$ Bsmt_Half_Bath <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, …

$ Full_Bath <int> 1, 1, 1, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 1, 1, 3, 2, …

$ Half_Bath <int> 0, 0, 1, 1, 1, 1, 0, 0, 0, 1, 1, 0, 1, 1, 1, 1, 0, …

$ Bedroom_AbvGr <int> 3, 2, 3, 3, 3, 3, 2, 2, 2, 3, 3, 3, 3, 2, 1, 4, 4, …

$ Kitchen_AbvGr <int> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, …

$ TotRms_AbvGrd <int> 7, 5, 6, 8, 6, 7, 6, 5, 5, 7, 7, 6, 7, 5, 4, 12, 8,…

$ Functional <fct> Typ, Typ, Typ, Typ, Typ, Typ, Typ, Typ, Typ, Typ, T…

$ Fireplaces <int> 2, 0, 0, 2, 1, 1, 0, 0, 1, 1, 1, 0, 1, 1, 0, 1, 0, …

$ Garage_Type <fct> Attchd, Attchd, Attchd, Attchd, Attchd, Attchd, Att…

$ Garage_Finish <fct> Fin, Unf, Unf, Fin, Fin, Fin, Fin, RFn, RFn, Fin, F…

$ Garage_Cars <dbl> 2, 1, 1, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 3, 2, …

$ Garage_Area <dbl> 528, 730, 312, 522, 482, 470, 582, 506, 608, 442, 4…

$ Garage_Cond <fct> Typical, Typical, Typical, Typical, Typical, Typica…

$ Paved_Drive <fct> Partial_Pavement, Paved, Paved, Paved, Paved, Paved…

$ Wood_Deck_SF <int> 210, 140, 393, 0, 212, 360, 0, 0, 237, 140, 157, 48…

$ Open_Porch_SF <int> 62, 0, 36, 0, 34, 36, 0, 82, 152, 60, 84, 21, 75, 0…

$ Enclosed_Porch <int> 0, 0, 0, 0, 0, 0, 170, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0…

$ Three_season_porch <int> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, …

$ Screen_Porch <int> 0, 120, 0, 0, 0, 0, 0, 144, 0, 0, 0, 0, 0, 0, 140, …

$ Pool_Area <int> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, …

$ Pool_QC <fct> No_Pool, No_Pool, No_Pool, No_Pool, No_Pool, No_Poo…

$ Fence <fct> No_Fence, Minimum_Privacy, No_Fence, No_Fence, Mini…

$ Misc_Feature <fct> None, None, Gar2, None, None, None, None, None, Non…

$ Misc_Val <int> 0, 0, 12500, 0, 0, 0, 0, 0, 0, 0, 0, 500, 0, 0, 0, …

$ Mo_Sold <int> 5, 6, 6, 4, 3, 6, 4, 1, 3, 6, 4, 3, 5, 2, 6, 6, 6, …

$ Year_Sold <int> 2010, 2010, 2010, 2010, 2010, 2010, 2010, 2010, 201…

$ Sale_Type <fct> WD , WD , WD , WD , WD , WD , WD , WD , WD , WD , W…

$ Sale_Condition <fct> Normal, Normal, Normal, Normal, Normal, Normal, Nor…

$ Sale_Price <int> 215000, 105000, 172000, 244000, 189900, 195500, 213…

$ Longitude <dbl> -93.61975, -93.61976, -93.61939, -93.61732, -93.638…

$ Latitude <dbl> 42.05403, 42.05301, 42.05266, 42.05125, 42.06090, 4…

Initial Data Split

Define Folds
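A minimal sketch of the split and fold definitions, assuming the tidymodels packages and the copy of the Ames data in modeldata. The object names ames_split, ames_test, and ames_folds and the seed are assumptions; a 75/25 split matches the fold sizes printed below, but whether the original split was stratified is not stated.

```r
library(tidymodels)  # attaches rsample, recipes, parsnip, tune, yardstick, ...

set.seed(427)  # arbitrary seed (assumption)

# Ames data as shipped with the modeldata package
data(ames, package = "modeldata")

# Initial split: 75% of the rows for training, the rest held out for testing
ames_split <- initial_split(ames, prop = 0.75)
ames_train <- training(ames_split)
ames_test  <- testing(ames_split)

# 10-fold cross-validation repeated 10 times, on the training data only
ames_folds <- vfold_cv(ames_train, v = 10, repeats = 10)
ames_folds
```

Each row of the result is one analysis/assessment split, so 10 folds repeated 10 times gives 100 rows.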

# 10-fold cross-validation repeated 10 times

# A tibble: 100 × 3

splits id id2

<list> <chr> <chr>

1 <split [1977/220]> Repeat01 Fold01

2 <split [1977/220]> Repeat01 Fold02

3 <split [1977/220]> Repeat01 Fold03

4 <split [1977/220]> Repeat01 Fold04

5 <split [1977/220]> Repeat01 Fold05

6 <split [1977/220]> Repeat01 Fold06

7 <split [1977/220]> Repeat01 Fold07

8 <split [1978/219]> Repeat01 Fold08

9 <split [1978/219]> Repeat01 Fold09

10 <split [1978/219]> Repeat01 Fold10

# ℹ 90 more rows

Define Model(s)

Define Preprocessing: Linear regression

lm_preproc <- recipe(Sale_Price ~ ., data = ames_train) |>
  step_dummy(all_nominal_predictors()) |> # convert categorical data into dummy variables
  step_zv(all_predictors()) |> # remove zero-variance predictors (i.e. predictors with one value)
  step_corr(all_predictors(), threshold = 0.5) |> # remove highly correlated predictors
  step_lincomb(all_predictors()) # remove predictors that are exact linear combinations of others

Define Preprocessing: KNN

knn_preproc <- recipe(Sale_Price ~ ., data = ames_train) |> # only uses ames_train for data types
  step_dummy(all_nominal_predictors(), one_hot = TRUE) |> # convert categorical data into dummy variables
  step_zv(all_predictors()) |> # remove zero-variance predictors (i.e. predictors with one value)
  step_normalize(all_predictors()) # center and scale all predictors

Define Workflows

Define Metrics

Fit and Assess Models
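The model, workflow, metric, and fitting steps named in the headings above might look roughly like this. It is a sketch under assumptions, not the code behind the tables that follow: it uses a small three-predictor recipe and fewer resamples so that it runs quickly, the number of neighbors is arbitrary, and all object names other than ames_train are hypothetical. The KNN engine requires the kknn package.

```r
library(tidymodels)

set.seed(427)  # arbitrary seed (assumption)
data(ames, package = "modeldata")
ames_train <- training(initial_split(ames, prop = 0.75))

# Fewer resamples than the 10 x 10 used in the slides, to keep the sketch quick
ames_folds <- vfold_cv(ames_train, v = 5, repeats = 2)

# A deliberately small recipe; the slides use all predictors
small_preproc <- recipe(Sale_Price ~ Gr_Liv_Area + Year_Built + Bldg_Type,
                        data = ames_train) |>
  step_dummy(all_nominal_predictors()) |>
  step_normalize(all_numeric_predictors())

# Model specifications
lm_model  <- linear_reg() |> set_engine("lm")
knn_model <- nearest_neighbor(neighbors = 10) |>  # neighbors = 10 is arbitrary
  set_engine("kknn") |>
  set_mode("regression")

# Workflows bundle the preprocessing and the model
lm_wf  <- workflow() |> add_recipe(small_preproc) |> add_model(lm_model)
knn_wf <- workflow() |> add_recipe(small_preproc) |> add_model(knn_model)

# Metrics to compute on every assessment set
ames_metrics <- metric_set(rmse, rsq)

# Fit each workflow on every analysis set and assess on the matching assessment set
lm_results  <- fit_resamples(lm_wf,  resamples = ames_folds, metrics = ames_metrics)
knn_results <- fit_resamples(knn_wf, resamples = ames_folds, metrics = ames_metrics)

collect_metrics(lm_results)   # one averaged row per metric
collect_metrics(knn_results)
```

Because the recipe lives inside the workflow, it is re-estimated on each analysis set, which is what "use whole modeling workflow" requires.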

Collecting Metrics

| .metric | .estimator | mean | n | std_err | .config |

|---|---|---|---|---|---|

| rmse | standard | 3.308697e+04 | 100 | 519.8344225 | Preprocessor1_Model1 |

| rsq | standard | 8.293514e-01 | 100 | 0.0044723 | Preprocessor1_Model1 |

| .metric | .estimator | mean | n | std_err | .config |

|---|---|---|---|---|---|

| rmse | standard | 4.029708e+04 | 100 | 438.177121 | Preprocessor1_Model1 |

| rsq | standard | 7.480995e-01 | 100 | 0.004546 | Preprocessor1_Model1 |

| .metric | .estimator | mean | n | std_err | .config |

|---|---|---|---|---|---|

| rmse | standard | 3.827391e+04 | 100 | 431.911500 | Preprocessor1_Model1 |

| rsq | standard | 7.774301e-01 | 100 | 0.003866 | Preprocessor1_Model1 |

Final Model

- After choosing best model/workflow, fit on full training set and assess on test set
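One way to do this in tidymodels is last_fit(), which fits a workflow on the full training set and evaluates it once on the test set. The workflow below is a hypothetical stand-in for whichever model was chosen; its two predictors, the seed, and the object names are assumptions.

```r
library(tidymodels)

set.seed(427)  # arbitrary seed (assumption)
data(ames, package = "modeldata")
ames_split <- initial_split(ames, prop = 0.75)

# Hypothetical "chosen" workflow: a simple linear regression
final_wf <- workflow() |>
  add_formula(Sale_Price ~ Gr_Liv_Area + Year_Built) |>
  add_model(linear_reg())

# Fit on the training portion of the split, assess on the test portion
final_fit <- last_fit(final_wf, split = ames_split,
                      metrics = metric_set(rmse, rsq))

collect_metrics(final_fit)  # test-set RMSE and R-squared
```

The test set is touched only here, after model selection is finished.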

Extracting CV Metrics

- collect_metrics averages over all 100 models

- Set summarize = FALSE to get all the individual errors

| id | id2 | .metric | .estimator | .estimate | .config |

|---|---|---|---|---|---|

| Repeat01 | Fold01 | rmse | standard | 3.879626e+04 | Preprocessor1_Model1 |

| Repeat01 | Fold01 | rsq | standard | 8.281876e-01 | Preprocessor1_Model1 |

| Repeat01 | Fold02 | rmse | standard | 2.811550e+04 | Preprocessor1_Model1 |

| Repeat01 | Fold02 | rsq | standard | 8.581329e-01 | Preprocessor1_Model1 |

| Repeat01 | Fold03 | rmse | standard | 2.930500e+04 | Preprocessor1_Model1 |

| Repeat01 | Fold03 | rsq | standard | 8.533908e-01 | Preprocessor1_Model1 |

Combining CV Metrics

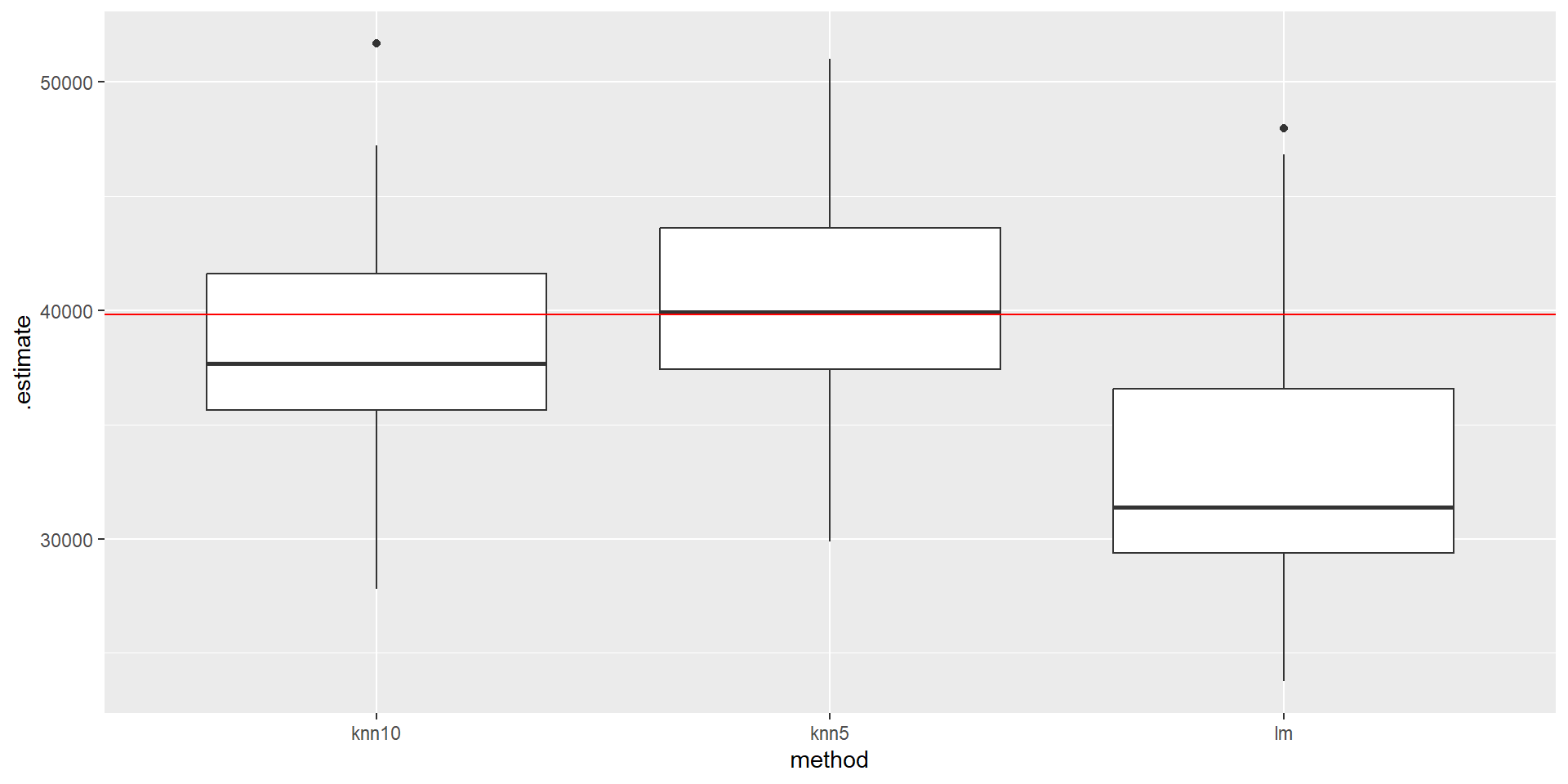

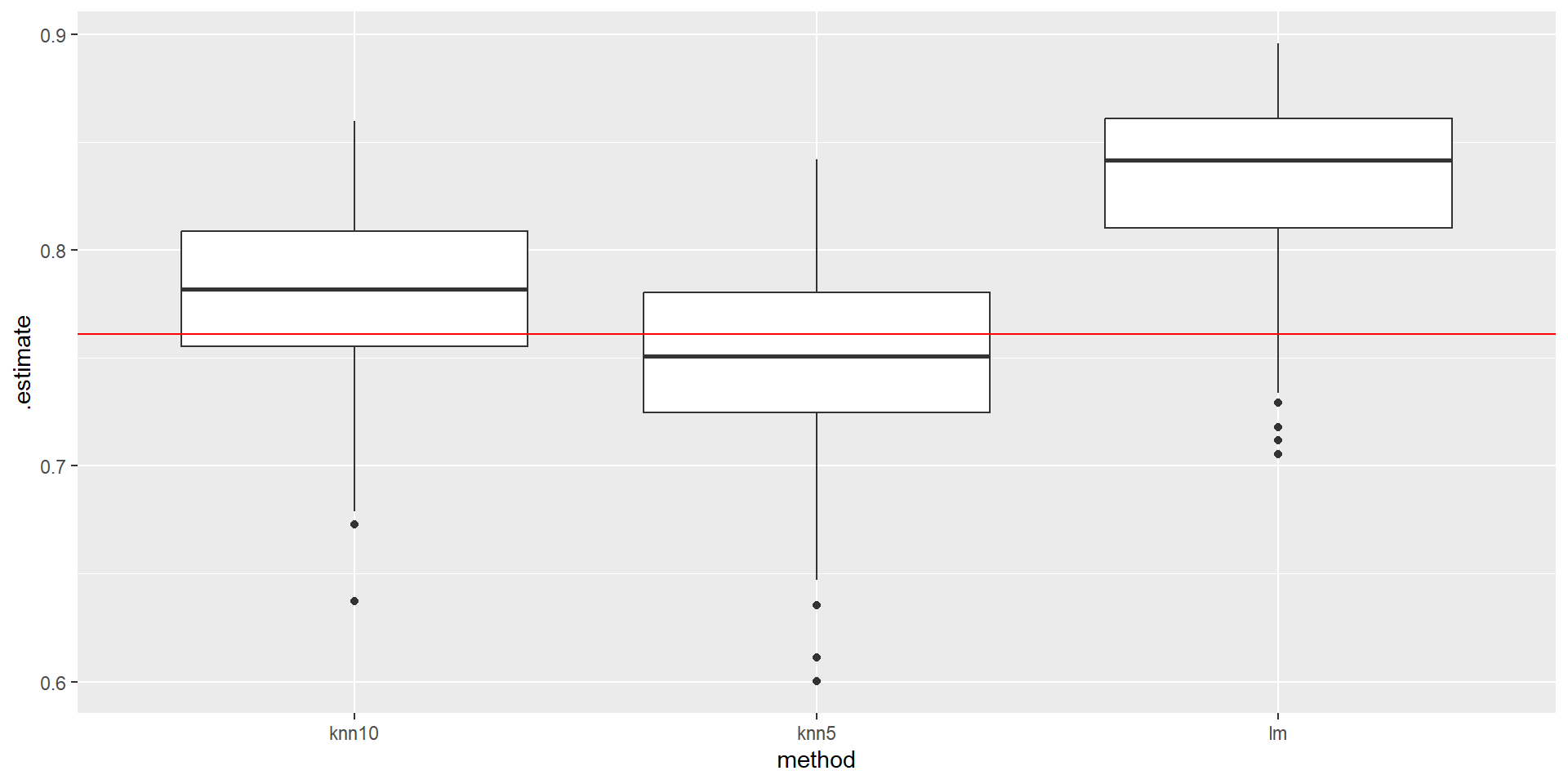

Visualizing CV Metrics

Pre-processing

Data: Different Ames Housing Prices

Goal: Predict Sale_Price.

Rows: 881

Columns: 20

$ Sale_Price <int> 244000, 213500, 185000, 394432, 190000, 149000, 149900, …

$ Gr_Liv_Area <int> 2110, 1338, 1187, 1856, 1844, NA, NA, 1069, 1940, 1544, …

$ Garage_Type <fct> Attchd, Attchd, Attchd, Attchd, Attchd, Attchd, Attchd, …

$ Garage_Cars <dbl> 2, 2, 2, 3, 2, 2, 2, 2, 3, 3, 2, 3, 3, 2, 2, 2, 3, 2, 2,…

$ Garage_Area <dbl> 522, 582, 420, 834, 546, 480, 500, 440, 606, 868, 532, 7…

$ Street <fct> Pave, Pave, Pave, Pave, Pave, Pave, Pave, Pave, Pave, Pa…

$ Utilities <fct> AllPub, AllPub, AllPub, AllPub, AllPub, AllPub, AllPub, …

$ Pool_Area <int> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ Neighborhood <fct> North_Ames, Stone_Brook, Gilbert, Stone_Brook, Northwest…

$ Screen_Porch <int> 0, 0, 0, 0, 0, 0, 0, 165, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, …

$ Overall_Qual <fct> Good, Very_Good, Above_Average, Excellent, Above_Average…

$ Lot_Area <int> 11160, 4920, 7980, 11394, 11751, 11241, 12537, 4043, 101…

$ Lot_Frontage <dbl> 93, 41, 0, 88, 105, 0, 0, 53, 83, 94, 95, 90, 105, 61, 6…

$ MS_SubClass <fct> One_Story_1946_and_Newer_All_Styles, One_Story_PUD_1946_…

$ Misc_Val <int> 0, 0, 500, 0, 0, 700, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0…

$ Open_Porch_SF <int> 0, 0, 21, 0, 122, 0, 0, 55, 95, 35, 70, 74, 130, 82, 48,…

$ TotRms_AbvGrd <int> 8, 6, 6, 8, 7, 5, 6, 4, 8, 7, 7, 7, 7, 6, 7, 7, 10, 7, 7…

$ First_Flr_SF <int> 2110, 1338, 1187, 1856, 1844, 1004, 1078, 1069, 1940, 15…

$ Second_Flr_SF <int> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 563, 0, 886, 656, 11…

$ Year_Built <int> 1968, 2001, 1992, 2010, 1977, 1970, 1971, 1977, 2009, 20…

Today

We’ll cover some common pre-processing tasks:

- Dealing with zero-variance (zv) and/or near-zero variance (nzv) variables

- Imputing missing entries

- Label encoding ordinal categorical variables

- Standardizing (centering and scaling) numeric predictors

- Lumping predictors

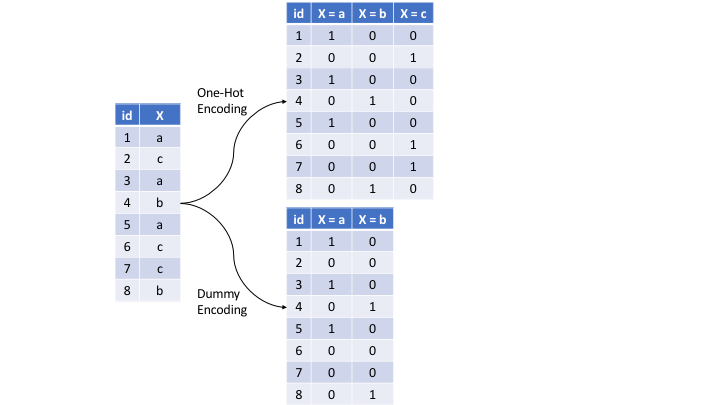

- One-hot/dummy encoding categorical predictors

Pre-Split Cleaning

- Before you split your data: make sure data is in correct format

- This may mean different things for different data sets

- Common examples:

- Fixing names of columns

- Ensure all variable types are correct

- Ensure all factor levels are correct and in order (if applicable)

- Remove any variables that are not important (or harmful) to your analysis

- Ensure missing values are coded as such (i.e. as NA instead of 0 or -1 or “missing”)

- Fill in missing values where you know what the answer should be (i.e. if a missing value really means 0 instead of missing)

Example: Factor Levels in Wrong Order

Re-Factoring

ames <- ames |>
  mutate(Overall_Qual = factor(Overall_Qual, levels = c("Very_Poor", "Poor",
                                                        "Fair", "Below_Average",
                                                        "Average", "Above_Average",
                                                        "Good", "Very_Good",
                                                        "Excellent", "Very_Excellent")))

ames |> pull(Overall_Qual) |> levels()

[1] "Very_Poor" "Poor" "Fair" "Below_Average"

[5] "Average" "Above_Average" "Good" "Very_Good"

[9] "Excellent" "Very_Excellent"

Zero-Variance (zv) and/or Near-Zero Variance (nzv) Variables

A common heuristic flags a feature as near-zero variance when both of the following hold:

- The fraction of unique values over the sample size is low (say ≤ 10%).

- The ratio of the frequency of the most prevalent value to the frequency of the second most prevalent value is large (say ≥ 20).

| Variable | freqRatio | percentUnique | zeroVar | nzv |

|---|---|---|---|---|

| Sale_Price | 1.000000 | 55.7321226 | FALSE | FALSE |

| Gr_Liv_Area | 1.333333 | 62.9965948 | FALSE | FALSE |

| Garage_Type | 2.196581 | 0.6810443 | FALSE | FALSE |

| Garage_Cars | 1.970213 | 0.5675369 | FALSE | FALSE |

| Garage_Area | 2.250000 | 38.0249716 | FALSE | FALSE |

| Street | 219.250000 | 0.2270148 | FALSE | TRUE |

| Utilities | 880.000000 | 0.2270148 | FALSE | TRUE |

| Pool_Area | 876.000000 | 0.6810443 | FALSE | TRUE |

| Neighborhood | 1.476744 | 2.9511918 | FALSE | FALSE |

| Screen_Porch | 199.750000 | 6.6969353 | FALSE | TRUE |

| Overall_Qual | 1.119816 | 1.1350738 | FALSE | FALSE |

| Lot_Area | 1.071429 | 79.7956867 | FALSE | FALSE |

| Lot_Frontage | 1.617021 | 11.5777526 | FALSE | FALSE |

| MS_SubClass | 1.959064 | 1.7026107 | FALSE | FALSE |

| Misc_Val | 141.833333 | 1.9296254 | FALSE | TRUE |

| Open_Porch_SF | 23.176471 | 19.2962543 | FALSE | FALSE |

| TotRms_AbvGrd | 1.311225 | 1.2485812 | FALSE | FALSE |

| First_Flr_SF | 1.777778 | 63.7911464 | FALSE | FALSE |

| Second_Flr_SF | 64.250000 | 31.3280363 | FALSE | FALSE |

| Year_Built | 1.175000 | 11.9182747 | FALSE | FALSE |
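The two quantities in the table can be computed by hand. Below is a base R sketch of the heuristic; the function name nzv_metrics and its cutoff arguments are hypothetical, the two example vectors are made up, and this is not the exact implementation used to produce the table.

```r
# Frequency ratio and percent unique for a single variable
nzv_metrics <- function(x, freq_cut = 20, unique_cut = 10) {
  counts <- sort(table(x), decreasing = TRUE)  # value counts, most common first
  # Most common count divided by second most common count
  freq_ratio <- if (length(counts) > 1) counts[[1]] / counts[[2]] else Inf
  # Number of distinct values as a percentage of the sample size
  percent_unique <- 100 * length(counts) / length(x)
  data.frame(
    freqRatio = freq_ratio,
    percentUnique = percent_unique,
    # Flag only when BOTH conditions hold
    nzv = freq_ratio >= freq_cut && percent_unique <= unique_cut
  )
}

# Made-up example: almost every house has no pool
pool_area <- c(rep(0, 97), 144, 480, 512)
nzv_metrics(pool_area)

# Made-up example: every value is distinct
lot_area <- seq(5000, 14900, by = 100)
nzv_metrics(lot_area)
```

Requiring both conditions is why Open_Porch_SF in the table is not flagged: its frequency ratio is above 20, but about 19% of its values are unique.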

Recipe: Near-Zero Variance

Missing Data

- Many times, you can’t just drop missing data

- Even if you can, dropping missing values can generate biased data/models

- Sometimes missing data gives you more information

- Types of missing data:

- Missing completely at random (MCAR): there is no pattern to your missing values

- Missing at random (MAR): missingness depends on other observed values in the data set

- Missing not at random (MNAR): missingness depends on the value that is missing

- Structured missingness (SM): the missingness of certain values depends on one another, regardless of whether the missing values are MCAR, MAR, or MNAR

MCAR: Examples

- Sensor data: occasionally sensors break so you’re missing data randomly

- Survey data: sometimes people just randomly skip questions

- Survey data: customers are randomly given 5 questions from a bank of 100 questions

MAR: Examples

- Men are less likely to respond to surveys about depression

- Medical study: patients who miss follow-up appointments are more likely to be young

- Survey responses: ESL respondents may be more likely to skip certain questions that are difficult to interpret (only MAR if you know they are ESL)

- Measure of student performance: students who score lower are more likely to skip questions

MNAR: Examples

- Survey on income: respondent may be less likely to report their income if they are poor

- Survey about political beliefs: respondent may be more likely to skip questions when their answer is perceived as undesirable

- Customer satisfaction: only customers who feel strongly respond

- Medical study: patients refuse to report unhealthy habits

Structurally Missing: Examples

- Health survey: all questions related to pregnancy are left blank by males

- Bank data set: combination of home, auto, and credit cards… not all customers have all three, so data is missing in certain portions

- Survey: many respondents stop the survey early, so all questions after a certain point are missing

- Netflix: customers may only watch similar movies and TV shows

Remedies for Missing Data

- Lots of complicated ways that you can read about

- Can drop a column if too much of the data is missing

- Imputing:

- step_impute_median: used for numeric (especially discrete) variables

- step_impute_mean: used for numeric variables

- step_impute_knn: used for both numeric and categorical variables (computationally expensive)

- step_impute_mode: used for nominal (having no order) categorical variables
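To make the idea behind median imputation concrete, here is a base R sketch on made-up numbers. The data frames and the helper impute_with are hypothetical. The key point is that the imputation value is learned from the training data only and then reused on new data.

```r
# Made-up training and test data with missing living areas
train <- data.frame(Gr_Liv_Area = c(1200, NA, 1500, 2100, 1800))
test  <- data.frame(Gr_Liv_Area = c(NA, 1700))

# Learn the imputation value from the training data only
train_median <- median(train$Gr_Liv_Area, na.rm = TRUE)

# Replace missing entries of x with a fixed value
impute_with <- function(x, value) {
  x[is.na(x)] <- value
  x
}

# Apply the SAME training median to both sets
train$Gr_Liv_Area <- impute_with(train$Gr_Liv_Area, train_median)
test$Gr_Liv_Area  <- impute_with(test$Gr_Liv_Area, train_median)

train_median  # 1650
```

Putting the imputation step inside a recipe gives the same behavior automatically during cross-validation: the value is re-estimated on each analysis set.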

Exploring Missing Data

Missing Data: Garage_Type

- Garage_Type is missing because the house has no garage

- Solution: replace NAs with No_Garage

- Do this before data splitting

Fixing Garage_Type

Missing Data: Year_Built

- MCAR

- Solution 1: Impute with mean or median

- Solution 2: Impute with KNN… maybe we can infer what the values are based on other values in the data set?

Missing Data: Gr_Liv_Area

- MCAR

- Solution 1: Impute with mean or median

- Solution 2: Impute with KNN… maybe we can infer what the values are based on other values in the data set?

Recipe: Missing Data

- Note: step_impute_knn uses Gower’s distance, so you don’t need to worry about normalizing first

Encoding Ordinal Features

Two types of categorical features:

- Ordinal (order is important)

- Nominal (order is not important)

Encoding Ordinal Features

[1] "Very_Poor" "Poor" "Fair" "Below_Average"

[5] "Average" "Above_Average" "Good" "Very_Good"

[9] "Excellent" "Very_Excellent"

Very_Poor = 1, Poor = 2, Fair = 3, etc…
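A small base R sketch of what this encoding does, and why the level order has to be fixed first. The four example values are made up; the level names come from the output above.

```r
quality_levels <- c("Very_Poor", "Poor", "Fair", "Below_Average", "Average",
                    "Above_Average", "Good", "Very_Good", "Excellent",
                    "Very_Excellent")

# A few made-up quality ratings
ratings <- c("Good", "Fair", "Very_Excellent", "Very_Poor")

# With the levels in the intended order, integer codes follow the quality scale
ordered_qual <- factor(ratings, levels = quality_levels)
as.integer(ordered_qual)  # 7 3 10 1

# Without explicit levels, R orders them alphabetically and the codes are meaningless
default_qual <- factor(ratings)
levels(default_qual)
```

This is why re-factoring is done during pre-split cleaning: an integer encoding is only as good as the level order it inherits.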

Recipe: Encoding Ordinal Features

Lump Small Categories Together

| Neighborhood | n |

|---|---|

| North_Ames | 127 |

| College_Creek | 86 |

| Old_Town | 83 |

| Edwards | 49 |

| Somerset | 50 |

| Northridge_Heights | 52 |

| Gilbert | 47 |

| Sawyer | 49 |

| Northwest_Ames | 41 |

| Sawyer_West | 31 |

| Mitchell | 33 |

| Brookside | 33 |

| Crawford | 22 |

| Iowa_DOT_and_Rail_Road | 28 |

| Timberland | 21 |

| Northridge | 22 |

| Stone_Brook | 17 |

| South_and_West_of_Iowa_State_University | 21 |

| Clear_Creek | 16 |

| Meadow_Village | 14 |

| Briardale | 10 |

| Bloomington_Heights | 10 |

| Veenker | 9 |

| Northpark_Villa | 3 |

| Blueste | 3 |

| Greens | 4 |
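The lumping idea can be sketched in base R on a handful of the counts from the table above. Only four neighborhoods are used, so the 5% threshold here is chosen for this toy subset and is an assumption, not the threshold used in the recipes.

```r
# Rebuild a small neighborhood vector from four of the counts in the table
neighborhood <- c(rep("North_Ames", 127), rep("College_Creek", 86),
                  rep("Blueste", 3), rep("Greens", 4))

threshold <- 0.05  # lump levels that make up less than 5% of this toy sample

# Proportion of rows in each level
props <- table(neighborhood) / length(neighborhood)

# Levels that fall below the threshold
rare <- names(props)[props < threshold]

# Replace rare levels with a single "Other" category
lumped <- ifelse(neighborhood %in% rare, "Other", neighborhood)
table(lumped)
```

Here Blueste and Greens together contribute 7 rows to Other, which gives a dummy variable for that group far more support than either level alone.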

Lump Small Categories Together

Recipe: Lumping Small Factors Together

preproc <- recipe(Sale_Price ~ ., data = ames) |>
  step_nzv(all_predictors()) |> # remove zero or near-zero variance predictors
  step_impute_knn(Year_Built, Gr_Liv_Area) |> # impute missing values in Year_Built and Gr_Liv_Area
  step_integer(Overall_Qual) |> # convert Overall_Qual into ordinal encoding
  step_other(Neighborhood, threshold = 0.01, other = "Other") # lump Neighborhood levels with less than 1% representation into a category called Other

One-hot/dummy encoding categorical predictors

Figure 3.9: Machine Learning with R

Recipe: Dummy Variables

preproc <- recipe(Sale_Price ~ ., data = ames) |>
  step_nzv(all_predictors()) |> # remove zero or near-zero variance predictors
  step_impute_knn(Year_Built, Gr_Liv_Area) |> # impute missing values in Year_Built and Gr_Liv_Area
  step_integer(Overall_Qual) |> # convert Overall_Qual into ordinal encoding
  step_other(Neighborhood, threshold = 0.01, other = "Other") |> # lump Neighborhood levels with less than 1% representation into a category called Other
  step_dummy(all_nominal_predictors(), one_hot = TRUE) # in general use one_hot unless doing linear regression

Recipe: Center and Scale

preproc <- recipe(Sale_Price ~ ., data = ames) |>
  step_nzv(all_predictors()) |> # remove zero or near-zero variance predictors
  step_impute_knn(Year_Built, Gr_Liv_Area) |> # impute missing values in Year_Built and Gr_Liv_Area
  step_integer(Overall_Qual) |> # convert Overall_Qual into ordinal encoding
  step_other(Neighborhood, threshold = 0.01, other = "Other") |> # lump Neighborhood levels with less than 1% representation into a category called Other
  step_dummy(all_nominal_predictors(), one_hot = TRUE) |> # in general use one_hot unless doing linear regression
  step_normalize(all_numeric_predictors()) # center and scale all numeric predictors

Order of Preprocessing Steps

Questions to ask:

- Should this be done before or after data splitting?

- If I do step_A first what is the impact on step_B? For example, do you want to encode categorical variables before or after normalizing?

- What data format is required by the model I’m fitting and how will my model react to these changes?

- Is this step part of my “model”? I.e. is this a decision I’m making based on the data or based on subject matter expertise?

- Do I have access to my test predictors?

Questions

- Should I lump before or after dummy coding?

- Should I dummy code before or after normalizing?

- Should I lump before my initial split?

- How does ordinal encoding impact linear regression vs. KNN?

Final R Workflow

Clean Data Set

ames <- ames |>
  mutate(Overall_Qual = factor(Overall_Qual, levels = c("Very_Poor", "Poor",
                                                        "Fair", "Below_Average",
                                                        "Average", "Above_Average",
                                                        "Good", "Very_Good",
                                                        "Excellent", "Very_Excellent")),
         # NA in Garage_Type means the house has no garage; convert to character so both branches share a type
         Garage_Type = if_else(is.na(Garage_Type), "No_Garage", as.character(Garage_Type)),
         Garage_Type = as_factor(Garage_Type)
  )

Initial Data Split

Define Folds

# 10-fold cross-validation repeated 10 times

# A tibble: 100 × 3

splits id id2

<list> <chr> <chr>

1 <split [594/66]> Repeat01 Fold01

2 <split [594/66]> Repeat01 Fold02

3 <split [594/66]> Repeat01 Fold03

4 <split [594/66]> Repeat01 Fold04

5 <split [594/66]> Repeat01 Fold05

6 <split [594/66]> Repeat01 Fold06

7 <split [594/66]> Repeat01 Fold07

8 <split [594/66]> Repeat01 Fold08

9 <split [594/66]> Repeat01 Fold09

10 <split [594/66]> Repeat01 Fold10

# ℹ 90 more rows

Define Model(s)

Define Preprocessing: Linear regression

lm_preproc <- recipe(Sale_Price ~ ., data = ames_train) |>
  step_nzv(all_predictors()) |> # remove zero or near-zero variance predictors
  step_impute_knn(Year_Built, Gr_Liv_Area) |> # impute missing values in Year_Built and Gr_Liv_Area
  step_integer(Overall_Qual) |> # convert Overall_Qual into ordinal encoding
  step_other(all_nominal_predictors(), threshold = 0.01, other = "Other") |> # lump all categories with less than 1% representation into a category called Other for each variable
  step_dummy(all_nominal_predictors(), one_hot = FALSE) |> # in general use one_hot unless doing linear regression
  step_corr(all_numeric_predictors(), threshold = 0.5) |> # remove highly correlated predictors
  step_lincomb(all_numeric_predictors()) # remove predictors that are exact linear combinations of others

Define Preprocessing: KNN

knn_preproc <- recipe(Sale_Price ~ ., data = ames_train) |>
  step_nzv(all_predictors()) |> # remove zero or near-zero variance predictors
  step_impute_knn(Year_Built, Gr_Liv_Area) |> # impute missing values in Year_Built and Gr_Liv_Area
  step_integer(Overall_Qual) |> # convert Overall_Qual into ordinal encoding
  step_other(all_nominal_predictors(), threshold = 0.01, other = "Other") |> # lump all categories with less than 1% representation into a category called Other for each variable
  step_dummy(all_nominal_predictors(), one_hot = TRUE) |> # in general use one_hot unless doing linear regression
  step_nzv(all_predictors()) |> # remove near-zero variance dummy columns created by the previous step
  step_normalize(all_numeric_predictors()) # center and scale all numeric predictors

Define Workflows

Define Metrics

Fit and Assess Models

Collecting Metrics

| .metric | .estimator | mean | n | std_err | .config |

|---|---|---|---|---|---|

| rmse | standard | 3.968417e+04 | 100 | 764.5524121 | Preprocessor1_Model1 |

| rsq | standard | 7.559368e-01 | 100 | 0.0088924 | Preprocessor1_Model1 |

| .metric | .estimator | mean | n | std_err | .config |

|---|---|---|---|---|---|

| rmse | standard | 3.999696e+04 | 100 | 956.4824660 | Preprocessor1_Model1 |

| rsq | standard | 7.596873e-01 | 100 | 0.0062576 | Preprocessor1_Model1 |

| .metric | .estimator | mean | n | std_err | .config |

|---|---|---|---|---|---|

| rmse | standard | 3.952087e+04 | 100 | 1008.0467220 | Preprocessor1_Model1 |

| rsq | standard | 7.681888e-01 | 100 | 0.0065365 | Preprocessor1_Model1 |

Fit & Evaluate Final Model

- After choosing best model/workflow, fit on full training set and assess on test set

Tips

- Can try out different pre-processing to see if it improves your model!

- Process can be intense for your computer, so it might take a while

- No 100% correct way to do it, although there are some 100% incorrect ways to do it